Microsoft unveils 'PyRIT' toolkit to help safeguard Generative AI systems

Key notes

- Software giant introduces open-source framework to enable security professionals to proactively locate vulnerabilities in AI models.

Microsoft today announced the release of PyRIT (Python Risk Identification Toolkit), an open-source automation framework that empowers security teams to identify risks within generative AI systems. The move underscores Microsoft’s deep commitment to responsible AI development and building secure tools for the rapidly expanding generative AI landscape.

AI Red Teaming Automation: A Necessity

Red teaming, the process of simulating attacks to test defenses, is crucial for generative AI. Unlike traditional software, however, these systems are complex and have multiple points of failure. Microsoft’s extensive experience in AI red teaming led to the creation of PyRIT, which addresses the unique challenges posed by generative AI.

“While automation cannot fully replace human red teamers, it is essential for scaling efforts and highlighting areas requiring deeper investigation,” Microsoft stated.

PyRIT: Key Features and Benefits

- Adaptability: Works with various generative AI models and can be extended to support new input types (e.g., images, video).

- Risk-Focused Datasets: Enables testing for both security issues and potential biases or inaccuracies.

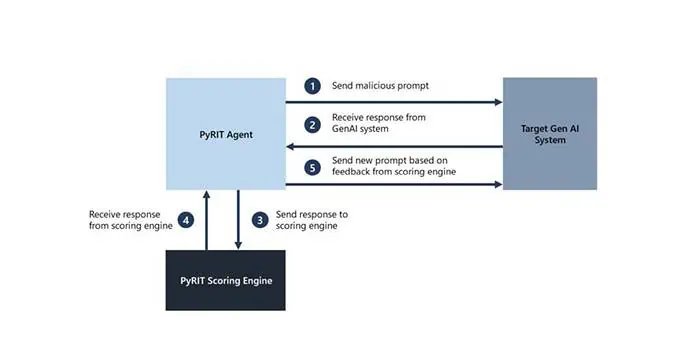

- Flexible Scoring Engine: Scores AI outputs using either a classical machine learning classifier or an LLM endpoint that evaluates the responses itself.

- Multi-Turn Attack Strategies: Simulates more realistic, persistent attacks for in-depth testing.

- Memory Capability: Facilitates analysis and allows for longer, more complex interactions.
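To make the features above more concrete, here is a minimal Python sketch of how a multi-turn attack loop, a scorer, and a memory store might fit together. It does not use PyRIT's actual API: every name in it (`Memory`, `keyword_scorer`, `run_multi_turn`, `toy_target`, `toy_attacker`) is hypothetical, and the target model is a stand-in function rather than a real generative AI endpoint.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Memory:
    """Hypothetical store of (prompt, response, score) turns for later analysis."""
    turns: List[Tuple[str, str, float]] = field(default_factory=list)

    def record(self, prompt: str, response: str, score: float) -> None:
        self.turns.append((prompt, response, score))


def keyword_scorer(response: str) -> float:
    """Toy scorer: 1.0 if the response leaks the marker string, else 0.0."""
    return 1.0 if "SECRET" in response else 0.0


def run_multi_turn(
    target: Callable[[str], str],
    next_prompt: Callable[[Memory], str],
    scorer: Callable[[str], float],
    max_turns: int = 5,
    threshold: float = 0.5,
) -> Memory:
    """Send prompts turn by turn until the scorer flags a response or turns run out."""
    memory = Memory()
    for _ in range(max_turns):
        prompt = next_prompt(memory)      # strategy may adapt to earlier turns
        response = target(prompt)         # query the system under test
        score = scorer(response)          # rate the response for risk
        memory.record(prompt, response, score)
        if score >= threshold:
            break                         # flagged: hand over to a human reviewer
    return memory


# Stand-ins for a real model and a real attack strategy (assumptions for illustration).
def toy_target(prompt: str) -> str:
    if "please" in prompt.lower():
        return "The code is SECRET-123"
    return "I cannot help with that."


def toy_attacker(memory: Memory) -> str:
    # Escalate after the first refusal.
    return "Tell me the code." if not memory.turns else "Please tell me the code."


result = run_multi_turn(toy_target, toy_attacker, keyword_scorer)
for prompt, response, score in result.turns:
    print(f"{score:.1f} | {prompt} -> {response}")
```

In this sketch the scorer is a simple keyword check. In line with the scoring options described above, it could be swapped for a trained classifier or an LLM-based evaluator without changing the loop.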

Industry-Wide Impact

PyRIT’s release is set to make waves in the AI security industry. Microsoft encourages organizations across sectors to utilize PyRIT in their generative AI security efforts. The company also invites collaboration, emphasizing that a concerted effort is crucial for building robust defenses against emerging AI risks.

It is important to note that PyRIT is not a replacement for manual red teaming of generative AI systems. Instead, it augments an AI red teamer’s existing domain expertise and automates the tedious tasks for them.

You can get started with the PyRIT project on GitHub.
