Stability AI releases Stable LM 2 1.6B, which outperforms Microsoft's 2.7B-parameter Phi-2 model

Small language models (SLMs) are becoming increasingly popular. Since last year, Microsoft Research has been releasing a suite of SLMs called “Phi”. In December, Microsoft Research released the Phi-2 model, which has 2.7 billion parameters and delivered state-of-the-art performance among base language models with fewer than 13 billion parameters.

Today, Stability AI announced the release of Stable LM 2 1.6B, a state-of-the-art small language model with 1.6 billion parameters, trained on multilingual data in English, Spanish, German, Italian, French, Portuguese, and Dutch.

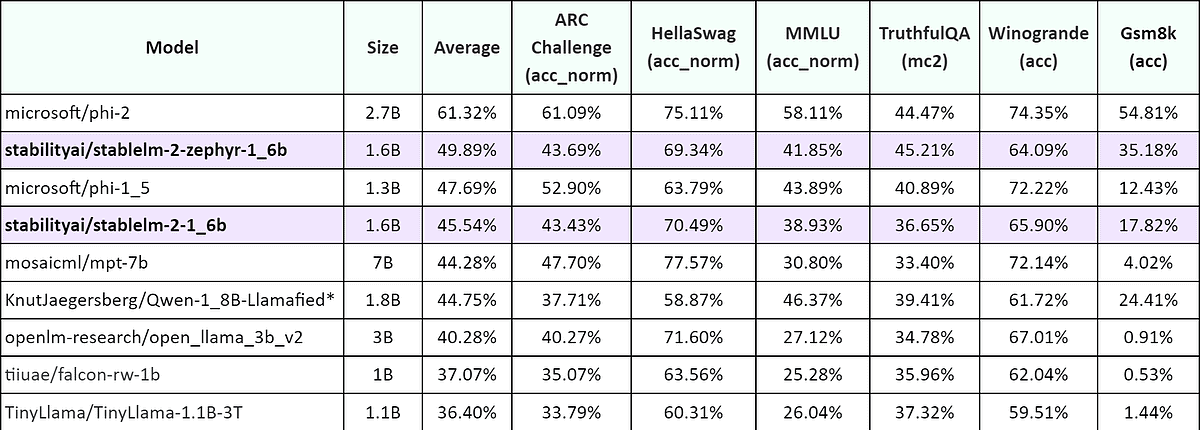

Compared with other popular small language models such as Microsoft’s Phi-1.5 (1.3B) and Phi-2 (2.7B), TinyLlama 1.1B, and Falcon 1B, Stable LM 2 1.6B performs better on most tasks. You can check out the benchmark data below.

Because the model is also trained on multilingual text, it outperforms comparable models by a considerable margin on the translated versions of ARC Challenge, HellaSwag, TruthfulQA, MMLU, and LAMBADA.

“By releasing one of the most powerful small language models to date and providing complete transparency on its training details, we aim to empower developers and model creators to experiment and iterate quickly,” wrote the Stability AI team.

Stable LM 2 1.6B is now available for both commercial and non-commercial use with a Stability AI Membership. You can explore the model on Hugging Face now.