New York Times will use AI, but how does journalism feel about it?

Read our disclosure page to find out how you can help MSPoweruser sustain the editorial team.

Despite suing OpenAI and Microsoft in December 2023 for allegedly using its copyrighted articles without permission to train AI models such as ChatGPT, the New York Times is now embracing AI tools.

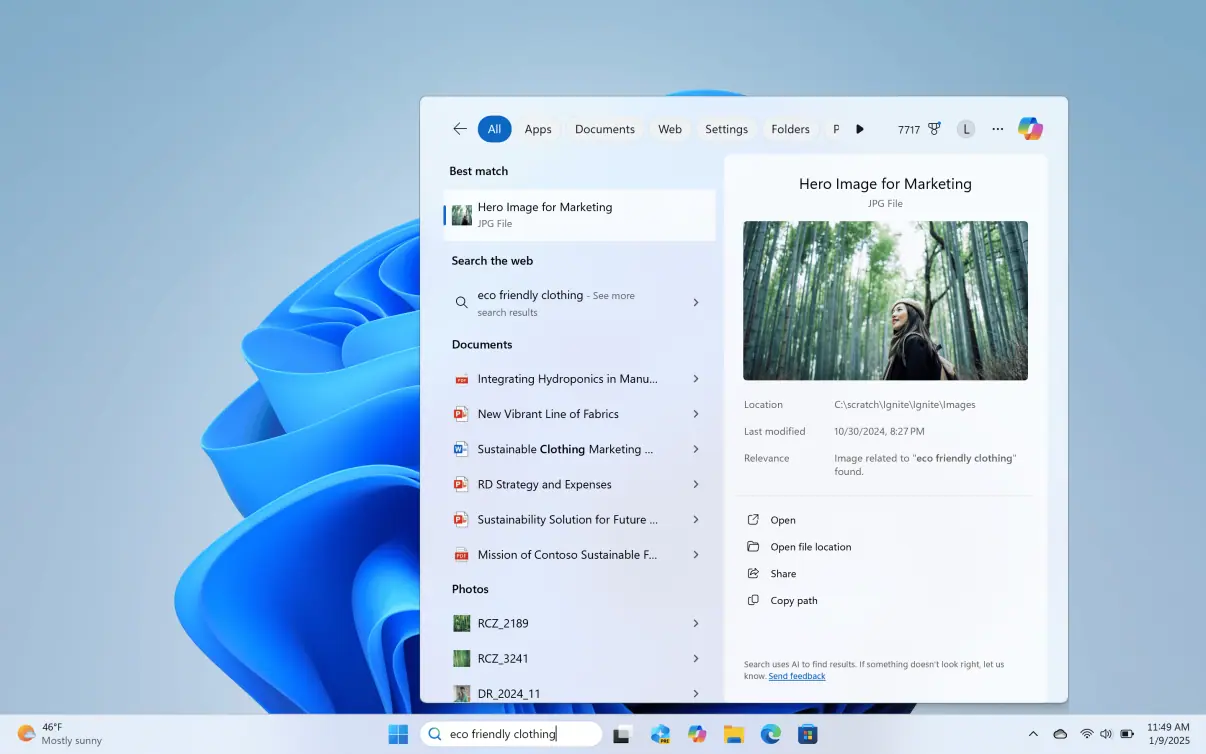

According to Semafor, which obtained information from inside the New York Times, the paper is introducing a new AI tool called Echo that will be used by both its editorial and product divisions. The Times is also allowing staff to use a range of other AI products, including GitHub Copilot, Google's Vertex AI, and OpenAI's API (as distinct from ChatGPT).

Staff are encouraged to use AI for tasks such as generating SEO headlines, summarizing articles, and brainstorming content ideas. However, the Times has set boundaries: AI may not be used to draft or significantly revise articles, and machine-generated content may not be published without proper labeling.

But how does the journalism world feel about it?

A recent report on AI in journalism shows that both news audiences and journalists have concerns about using AI tools, like chatbots and image generators, in newsrooms.

The study, which draws on research from seven countries and was cited by The Conversation, found that people are more comfortable with AI being used for tasks such as transcription or brainstorming than for editing or creating content.

The report also highlights the risk of AI spreading misinformation, such as false news alerts and fake images, and stresses the need for transparency and ethical AI use. Unfortunately, that risk is all too familiar: Apple Intelligence's AI summary feature recently claimed, incorrectly, that Luigi Mangione, the suspect in the killing of a healthcare CEO, had shot himself.