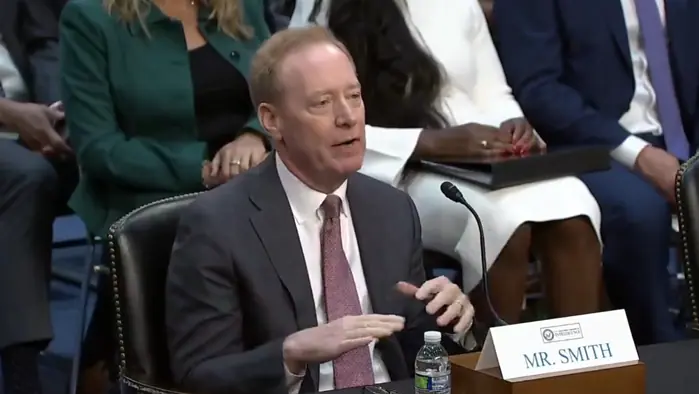

Microsoft's Brad Smith pushes for AI deepfake law before 2024 elections, but odds are slim

Microsoft, Google, Adobe, and other tech giants are part of the C2PA initiative


Key notes

- Brad Smith urged the Senate to pass new laws targeting AI deepfakes and misleading political ads.

- Microsoft is working with the C2PA to label and verify AI-generated content, and Google is integrating the coalition's standards into its products.

- However, Congress is unlikely to pass new AI regulations before the 2024 elections.

Microsoft’s Vice Chair and President, Brad Smith, recently testified before the Senate Intelligence Committee ahead of the US elections in November 2024.

Smith recommended that Congress pass a federal deepfake fraud statute to address AI-generated scams, require advanced labeling of synthetic content to build public trust, and enact the bipartisan Protect Elections from Deceptive AI Act, which would bar deceptive AI-generated political ads while exempting satire.

“We need to give law enforcement officials, including state attorneys general, a standalone legal framework to prosecute AI-generated fraud and scams as they proliferate in speed and complexity,” Smith said, also urging lawmakers to pass the bipartisan Protect Elections from Deceptive AI Act.

Microsoft is part of the Coalition for Content Provenance and Authenticity (C2PA) alongside Adobe, Google, OpenAI, and other tech giants. Rather than regulating content itself, the C2PA develops technical standards for attaching tamper-evident credentials to images and other media, making AI-generated visuals easier to spot.

Google also said it will bring the C2PA standards, which record an image's origin and editing history, to Google Images, Lens in Chrome, and YouTube in the coming months.

During the hearing, senators also urged top officials from Google, Apple, and Meta to step up their efforts to combat foreign influence in the elections.

Senators said that despite ongoing efforts to combat disinformation, foreign actors, particularly Russia, continue to use tactics such as fake news and social media manipulation to sway public opinion.

Despite urgent warnings about the risks AI deepfakes pose to elections, Congress is unlikely to pass any new laws addressing them this year.

Although several bills have been proposed to regulate AI-generated election content, including deepfake bans and mandatory disclosures, legislative efforts have stalled amid partisan disagreements and a packed congressional schedule.

A recent Adobe study found that many people are cutting back on social media over concerns about misinformation ahead of the 2024 elections. AI-generated content makes it harder to tell what’s real: at least 87% of respondents said they find it more difficult to distinguish truth from fiction.
