Microsoft Research introduces Splitwise, a new technique to boost GPU efficiency for Large Language Models

Key notes

- Splitwise improves the efficiency and sustainability of LLM inference by running the two phases of each request on separate machines.

- Separating the prompt and token phases lets each phase run on GPUs suited to its workload, so cloud providers can serve more queries faster under the same power budget.

Large language models (LLMs) are transforming the fields of natural language processing and artificial intelligence, enabling applications such as code generation, conversational agents, and text summarization. However, these models also pose significant challenges for cloud providers, who need to deploy more and more graphics processing units (GPUs) to meet the growing demand for LLM inference.

The problem is that GPUs are not only expensive, but also power-hungry, and the capacity to provision the electricity needed to run them is limited. As a result, cloud providers often face the dilemma of either rejecting user queries or increasing their operational costs and environmental impact.

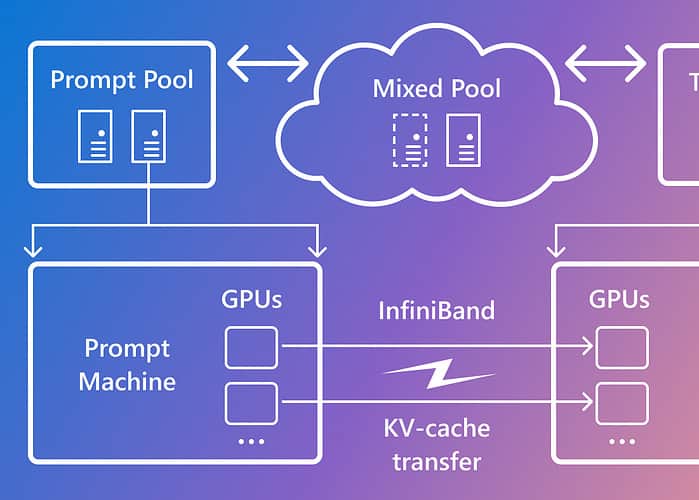

To address this issue, researchers at Microsoft Azure have developed a novel technique called Splitwise, which aims to make LLM inference more efficient and sustainable by splitting the computation into two distinct phases and allocating them to different machines. You can read about this technique in detail in their “Splitwise: Efficient Generative LLM Inference Using Phase Splitting” research paper.

Splitwise is based on the observation that LLM inference consists of two phases with different characteristics: the prompt phase and the token-generation phase. In the prompt phase, the model processes the user input, or prompt, in parallel, using a lot of GPU compute. In the token-generation phase, the model generates each output token sequentially, using a lot of GPU memory bandwidth. Because the token phase is limited by memory bandwidth rather than compute, it does not need the most powerful GPUs, so each phase can run on hardware suited to it; the state built during the prompt phase (the KV cache) is transferred from the prompt machine to the token machine. In addition to separating the two LLM inference phases into two distinct machine pools, Microsoft used a third machine pool for mixed batching across the prompt and token phases, sized dynamically based on real-time computational demands.
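The phase split can be illustrated with a toy scheduler. This is a minimal sketch under heavy simplifying assumptions, not Splitwise's actual implementation: `Request`, `Pool`, `acquire` and `serve` are hypothetical names, each pool is reduced to a fixed number of request slots, phases are treated as finishing instantly, the mixed pool has a fixed size instead of being resized dynamically, and the KV-cache transfer is only noted in a comment. It shows the prompt phase handling all prompt tokens in one forward pass, the token phase taking one pass per remaining output token, and requests overflowing to the mixed pool when a dedicated pool is full.

```python
from dataclasses import dataclass


@dataclass
class Request:
    req_id: int
    prompt_tokens: int   # length of the user prompt
    output_tokens: int   # total number of tokens to generate


@dataclass
class Pool:
    name: str            # "prompt", "token" or "mixed"
    slots: int           # hypothetical: concurrent requests the pool can hold
    in_use: int = 0

    def try_acquire(self) -> bool:
        """Claim a slot if one is free."""
        if self.in_use < self.slots:
            self.in_use += 1
            return True
        return False

    def release(self) -> None:
        self.in_use -= 1


def acquire(dedicated: Pool, mixed: Pool) -> Pool:
    """Prefer the phase's dedicated pool; overflow to the mixed pool when it is full."""
    for pool in (dedicated, mixed):
        if pool.try_acquire():
            return pool
    raise RuntimeError(f"no free slot in {dedicated.name} or {mixed.name} pool")


def serve(req: Request, prompt_pool: Pool, token_pool: Pool, mixed_pool: Pool):
    """Return (pool, phase, forward_passes, tokens_per_pass) for each phase of req."""
    log = []
    # Prompt phase: all prompt tokens go through ONE parallel forward pass,
    # which builds the KV cache and emits the first output token.
    p = acquire(prompt_pool, mixed_pool)
    log.append((p.name, "prompt", 1, req.prompt_tokens))
    p.release()  # toy model: the phase is treated as finishing immediately

    remaining = req.output_tokens - 1
    if remaining > 0:
        # A real system would now send the KV cache to the token machine.
        # Token phase: one forward pass per new token, run sequentially.
        t = acquire(token_pool, mixed_pool)
        log.append((t.name, "token", remaining, 1))
        t.release()
    return log


if __name__ == "__main__":
    prompt, token, mixed = Pool("prompt", 1), Pool("token", 4), Pool("mixed", 2)
    prompt.in_use = 1  # pretend the prompt pool is already busy
    print(serve(Request(1, prompt_tokens=512, output_tokens=128), prompt, token, mixed))
    # [('mixed', 'prompt', 1, 512), ('token', 'token', 127, 1)]
```

The contrast in the log is the point: 512 tokens in a single compute-heavy pass versus 127 small sequential passes, which is why the two phases benefit from different hardware.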

Using Splitwise, Microsoft was able to achieve the following:

- 1.4x higher throughput at 20% lower cost than current designs.

- 2.35x more throughput with the same cost and power budgets.
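The two results can be put on a common footing by computing throughput per unit of cost relative to the baseline. This is a back-of-the-envelope sketch that assumes both figures are measured against the same baseline cluster; `throughput_per_cost` is a hypothetical helper, not something from the paper.

```python
def throughput_per_cost(throughput_ratio: float, cost_ratio: float) -> float:
    """Throughput per unit cost, relative to a baseline whose value is 1.0."""
    if cost_ratio <= 0:
        raise ValueError("cost_ratio must be positive")
    return throughput_ratio / cost_ratio


# 1.4x throughput at 20% lower cost: cost ratio of 0.8
print(round(throughput_per_cost(1.4, 0.8), 2))   # 1.75
# 2.35x throughput at the same cost: cost ratio of 1.0
print(round(throughput_per_cost(2.35, 1.0), 2))  # 2.35
```

Under that assumption, the first configuration delivers roughly 1.75x the baseline's throughput per unit of cost, while the second keeps cost fixed and spends the whole gain on throughput.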

Splitwise is now part of vLLM and can also be implemented with other frameworks. The researchers at Microsoft Azure plan to continue their work on making LLM inference more efficient and sustainable, and envision tailored machine pools that maximize throughput while reducing cost and power consumption.