Microsoft patents an innovative way to bring foveated rendering to regular video streams

The new patent has a lot of potential applications.

Key notes

- Microsoft filed a patent application for a method that brings foveated rendering to regular video streams.

- Foveated rendering draws only the central part of the image, where the human eye is most sensitive to detail, in high resolution.

- Microsoft's method aims to simplify gaze estimation, the step that has kept foveated rendering from wider use.

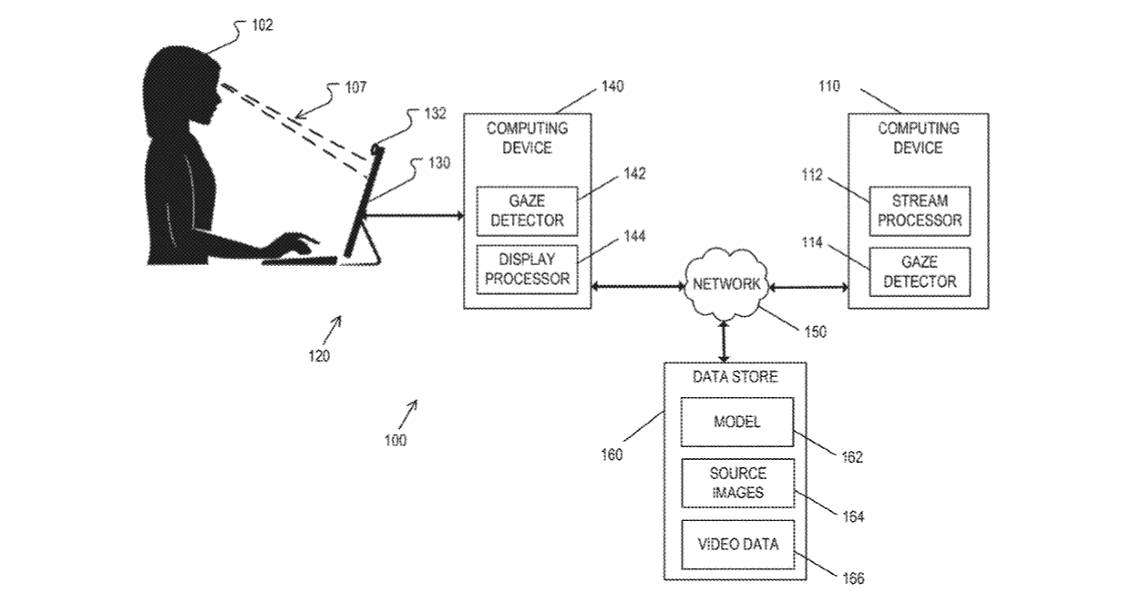

Around the same time as patenting a new way for AI to automatically create help documentation for apps, Microsoft has filed a new patent for a piece of tech that brings foveated rendering to regular video streams.

The application, published by the US Patent and Trademark Office (USPTO) on December 7, 2023, but filed back in August, details a method that takes advantage of the limitations of the human visual system.

Based on our understanding, the applications of this patent extend beyond video streaming and into the gaming industry. The system can dynamically adjust the quality of video streams based on where you are looking, which is the core idea behind foveated rendering.

Why does it matter? Well, foveated rendering is commonly used in VR games such as Resident Evil 7 and No Man's Sky. It renders only the central part of the image, where the human eye is most sensitive to detail, in high resolution, and renders the periphery at lower quality.
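To make the idea concrete, here is a minimal sketch of how a renderer might pick a resolution scale for each screen tile from its distance to the gaze point. It is an illustration only, not taken from the patent: the function name `foveation_scale`, the linear falloff, and the default values for `fovea_radius` and `min_scale` are all assumptions.

```python
import math


def foveation_scale(tile_center, gaze, fovea_radius=0.15, min_scale=0.25):
    """Return a resolution scale between min_scale and 1.0 for one tile.

    Coordinates are normalized to the 0..1 range on both axes.
    The parameter names and defaults are illustrative assumptions.
    """
    dist = math.dist(tile_center, gaze)
    if dist <= fovea_radius:
        # Tiles near the gaze point keep full resolution.
        return 1.0
    # Linear falloff from full resolution at the fovea edge
    # down to min_scale at a normalized distance of 1.0 or more.
    t = min((dist - fovea_radius) / (1.0 - fovea_radius), 1.0)
    return 1.0 - t * (1.0 - min_scale)


if __name__ == "__main__":
    gaze = (0.5, 0.5)
    for center in [(0.5, 0.5), (0.8, 0.5), (0.0, 0.0)]:
        print(center, round(foveation_scale(center, gaze), 3))
```

Real headsets use more elaborate falloff curves and hardware features, but the principle is the same: the further a tile is from where you are looking, the less detail it gets.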

In general, virtual and augmented reality headsets often take advantage of this. Sony’s PlayStation VR, Meta’s Oculus Quest 2, Apple Vision Pro, and even Microsoft HoloLens devices have it, but it’s not as widespread in other areas because accurately tracking a person’s gaze (where they’re looking) is a bit tricky.

So, Microsoft aims to streamline that process. According to the filing, its method makes it significantly simpler to estimate where users are looking, enabling foveated rendering even in regular video streams.

The Redmond-based tech giant also outlines how the method functions. Several video streams are received for transmission to a display device, each with a distinct initial image quality level.

As the system estimates your gaze location on the display device, it selects at least one video stream for processing. The selected stream is then automatically modified based on the estimated gaze location, using a quality level that is lower than the corresponding initial image quality level.

Subsequently, both the processed and unprocessed video streams are transmitted to the display device.
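The steps above can be sketched in a few lines of Python. This is a rough illustration under stated assumptions, not Microsoft's implementation: the `Stream` type, the `region` field, the rule of selecting streams whose region does not contain the gaze point, and the `reduced_fraction` parameter are all hypothetical, and quality is modeled as a plain integer of at least 2.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Stream:
    name: str
    region: tuple  # (x0, y0, x1, y1) on the display, normalized 0..1 (assumed)
    quality: int   # initial image quality level, assumed to be >= 2


def contains(region, gaze):
    """Return True if the gaze point falls inside the stream's region."""
    x0, y0, x1, y1 = region
    gx, gy = gaze
    return x0 <= gx <= x1 and y0 <= gy <= y1


def foveate_streams(streams, gaze, reduced_fraction=0.5):
    """Lower the quality of streams the viewer is not looking at.

    Assumed selection rule: a stream is selected for processing when
    its region does not contain the estimated gaze location.
    """
    output = []
    for stream in streams:
        if contains(stream.region, gaze):
            # Unprocessed stream: keeps its initial quality level.
            output.append(stream)
        else:
            # Processed stream: quality drops below its initial level.
            lowered = max(1, int(stream.quality * reduced_fraction))
            output.append(replace(stream, quality=lowered))
    # Both processed and unprocessed streams go on to the display.
    return output


if __name__ == "__main__":
    streams = [
        Stream("speaker", (0.0, 0.0, 0.5, 1.0), 100),
        Stream("slides", (0.5, 0.0, 1.0, 1.0), 80),
    ]
    for s in foveate_streams(streams, gaze=(0.25, 0.5)):
        print(s.name, s.quality)
```

In a video call with two feeds side by side, for example, the feed you are looking at would stay at full quality while the other is sent at reduced quality, saving bandwidth.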

What are your thoughts on this? Do you see this tech being implemented any time soon?