Microsoft and NVIDIA announce the largest and the most powerful language model trained to date

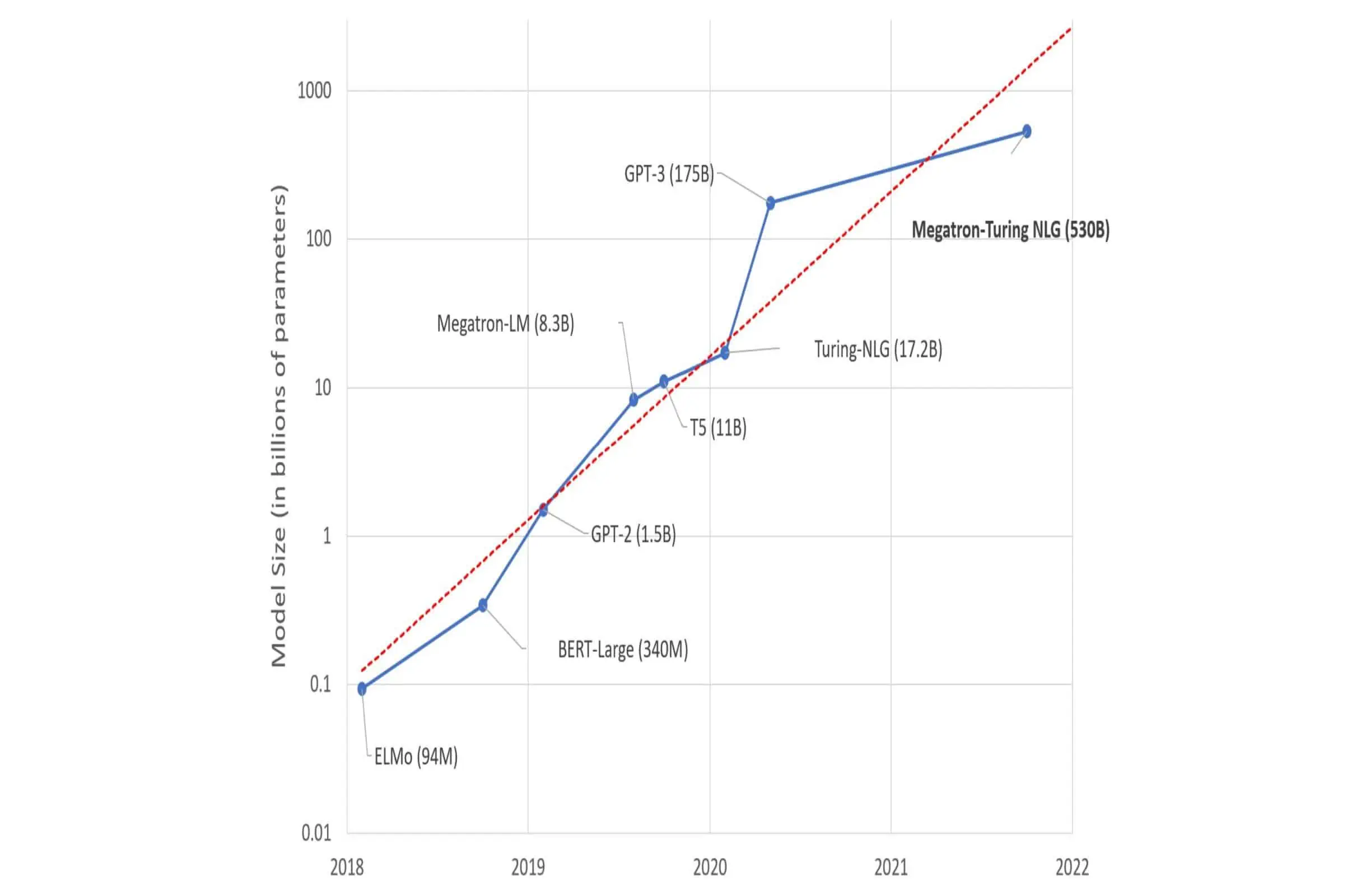

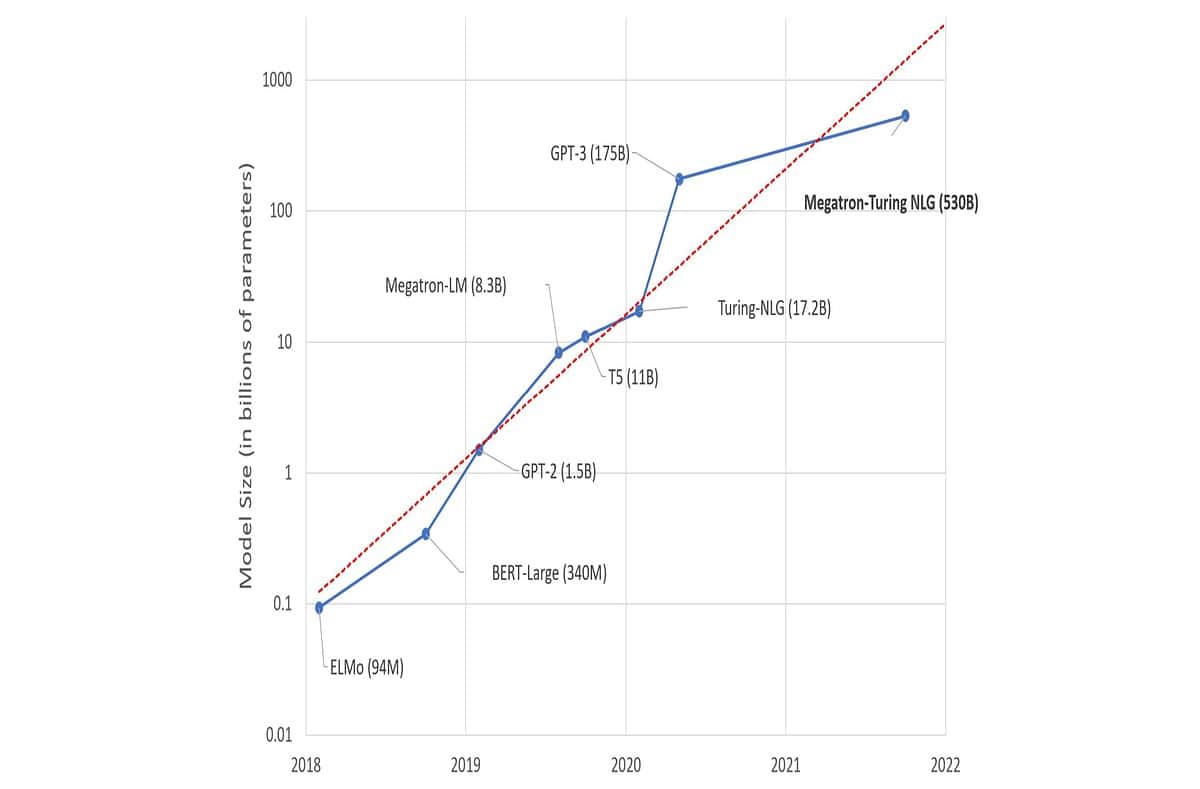

Microsoft and NVIDIA today announced the Megatron-Turing Natural Language Generation model (MT-NLG), powered by DeepSpeed and Megatron, the largest and most powerful monolithic transformer language model trained to date. The model has 530 billion parameters, 3x as many as the largest existing model, GPT-3. Training such a large model involves various challenges, and NVIDIA and Microsoft worked on many innovations and breakthroughs along all AI axes to address them.
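To put the parameter count in perspective, here is a rough back-of-the-envelope sketch in Python. It checks the 3x figure against GPT-3's published 175 billion parameters and estimates how much memory the weights alone would occupy. The 16-bit weight storage and the 80 GB accelerator size are illustrative assumptions, not details from the announcement, and the estimate ignores optimizer state, gradients, and activations, which add considerably more.

```python
import math

MT_NLG_PARAMS = 530 * 10**9      # from the announcement
GPT3_PARAMS = 175 * 10**9        # GPT-3's published parameter count

# Assumptions for illustration only (not stated in the announcement):
BYTES_PER_PARAM = 2              # weights stored in 16-bit precision
GPU_MEMORY_BYTES = 80 * 10**9    # a hypothetical 80 GB accelerator


def param_ratio(larger, smaller):
    """How many times more parameters `larger` has than `smaller`."""
    return larger / smaller


def weight_bytes(params, bytes_per_param=BYTES_PER_PARAM):
    """Memory needed to hold the weights alone, in bytes."""
    return params * bytes_per_param


def min_gpus_for_weights(params, gpu_memory=GPU_MEMORY_BYTES):
    """Lower bound on devices needed just to store the weights."""
    return math.ceil(weight_bytes(params) / gpu_memory)


print(f"ratio to GPT-3: {param_ratio(MT_NLG_PARAMS, GPT3_PARAMS):.2f}x")
print(f"weights alone:  {weight_bytes(MT_NLG_PARAMS) / 10**9:.0f} GB")
print(f"minimum GPUs:   {min_gpus_for_weights(MT_NLG_PARAMS)}")
```

Under these assumptions the weights do not fit on a single device, which is one reason a model of this size has to be split across many GPUs during training.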

For example, working closely together, NVIDIA and Microsoft achieved unprecedented training efficiency by combining state-of-the-art GPU-accelerated training infrastructure with a cutting-edge distributed learning software stack. The teams built high-quality natural language training corpora with hundreds of billions of tokens and co-developed training recipes to improve optimization efficiency and stability.

You can learn more about this project from the links below.
