Google's Gemini gets new update that lets it "see what you see"

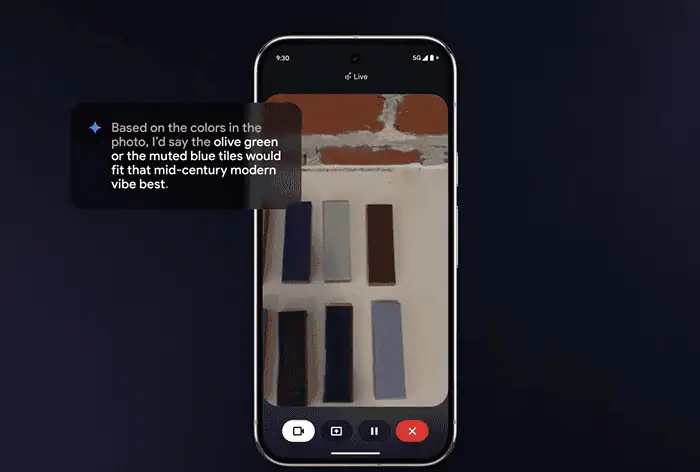

Google has begun rolling out new AI features for Gemini Live that enable real-time interaction through a smartphone's camera and screen. Developed under the "Project Astra" initiative, the updates allow Gemini to understand and respond to visual content directly from a device's screen or camera feed.

Gemini can now “see” your screen

Some Android users with Gemini Advanced subscriptions have reported gaining access to these features. For instance, a Reddit user on a Xiaomi phone demonstrated Gemini summarizing on-screen content and holding a contextual discussion about it.

This development puts Google at the forefront of AI assistant technology, with capabilities that exceed those of rival assistants such as Amazon's Alexa Plus and Apple's Siri. Notably, Gemini will be the default assistant on Samsung phones, further expanding its user base.

Adding live screen and camera analysis to Gemini Live reflects Google's push to deepen user interaction through more sophisticated AI. As these capabilities become mainstream, they are likely to significantly change how people interact with their devices.