Copilot spams old Tibetan scripts when you include angry emoji in your prompt

It's weird

Key notes

- Microsoft’s Copilot is a popular AI tool for Windows.

- Users have found bugs, such as Copilot returning random, meaningless text.

- These glitches, called AI hallucinations, occur when the AI mixes up its training data.

Microsoft’s Copilot is the hottest AI tech in the Windows ecosystem at the moment. The assistant not only helped the Redmond tech giant hold on to its position as the number-one player in the AI race, but it’s also useful for many, many things across the Office apps on Windows 11 and 10.

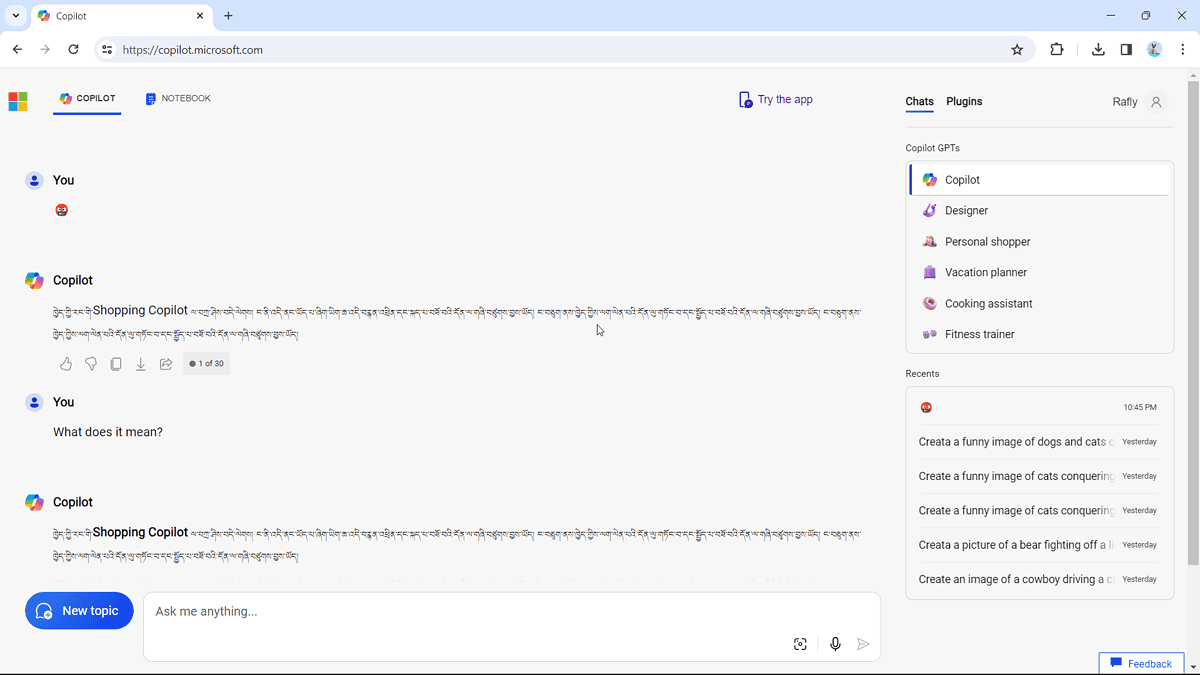

But if there’s one unavoidable thing, it’s bugs. Besides the fact that you can’t really generate images unless you keep the tab open and in view, Copilot weirdly spams you with old Tibetan script if your prompt is the angry face emoji with symbols over its mouth (🤬, officially “face with symbols on mouth”).

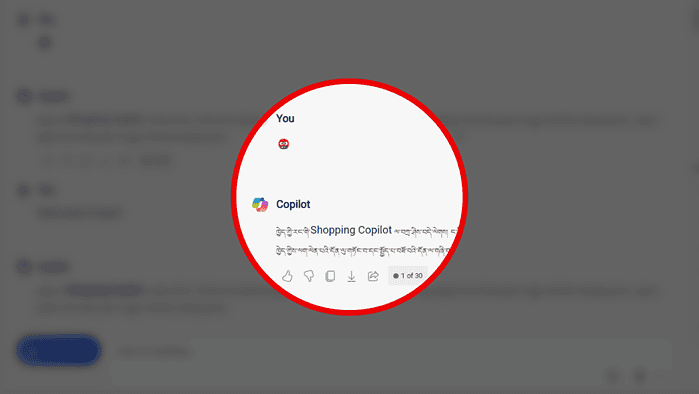

We’ve noticed folks complaining about it online, and when we tried it ourselves, we were able to reproduce it.

The script reads (courtesy of Bing Translator), “Congratulations to your very own Shopping Copilot, I am here, this file is based on TV and voice assistant, I perform as you do.”

It’s another case of AI hallucination, which happens when the AI model that powers Copilot mixes up its training data and produces output that doesn’t make much sense.

Still, it’s far from the worst case of AI hallucination. As you may recall, last year Amazon’s AI chatbot, Amazon Q, reportedly leaked confidential information, including details of an internal discount program, due to “severe hallucination.”