Anthropic washes hands over claims that its AI scraper hits iFixit's site a million times daily

An AI web scraper collects large amounts of internet data to train its AI models

Key notes

- Anthropic AI’s web scraper hit iFixit’s site a million times in one day, breaching their terms of service.

- iFixit’s CEO criticized Anthropic and updated their robots.txt file to block the scraper.

- Despite terms of service, Anthropic insists website owners must block their crawler explicitly.

Anthropic AI’s web scraper, used for training its AI chatbot Claude, reportedly hit iFixit’s website nearly a million times in one day, violating iFixit’s terms of service that prohibit such activity without permission.

This heavy scraping burdened iFixit’s servers, prompting CEO Kyle Wiens to publicly criticize Anthropic on social media and modify their robots.txt file to block the bot.

“Hey @AnthropicAI: I get you’re hungry for data. Claude is really smart! But do you really need to hit our servers a million times in 24 hours? You’re not only taking our content without paying, you’re tying up our DevOps resources. Not cool,” Wiens wrote on X, calling out the Amazon-backed AI startup.

Wiens also said that iFixit provides archives of its content, and suggested that AI companies may simply be using standard, blunt crawling tools, much as Google does.

Despite iFixit’s terms of service, Anthropic, when asked about the issue by the press (via 404 Media), said that website owners need to explicitly block its crawler to avoid such issues.

Training AI models relies on heavy consumption of money, energy, and other resources. So much so that Google and Microsoft, two of the biggest players in the AI race, consumed more electricity and made more money than some of the world’s countries.

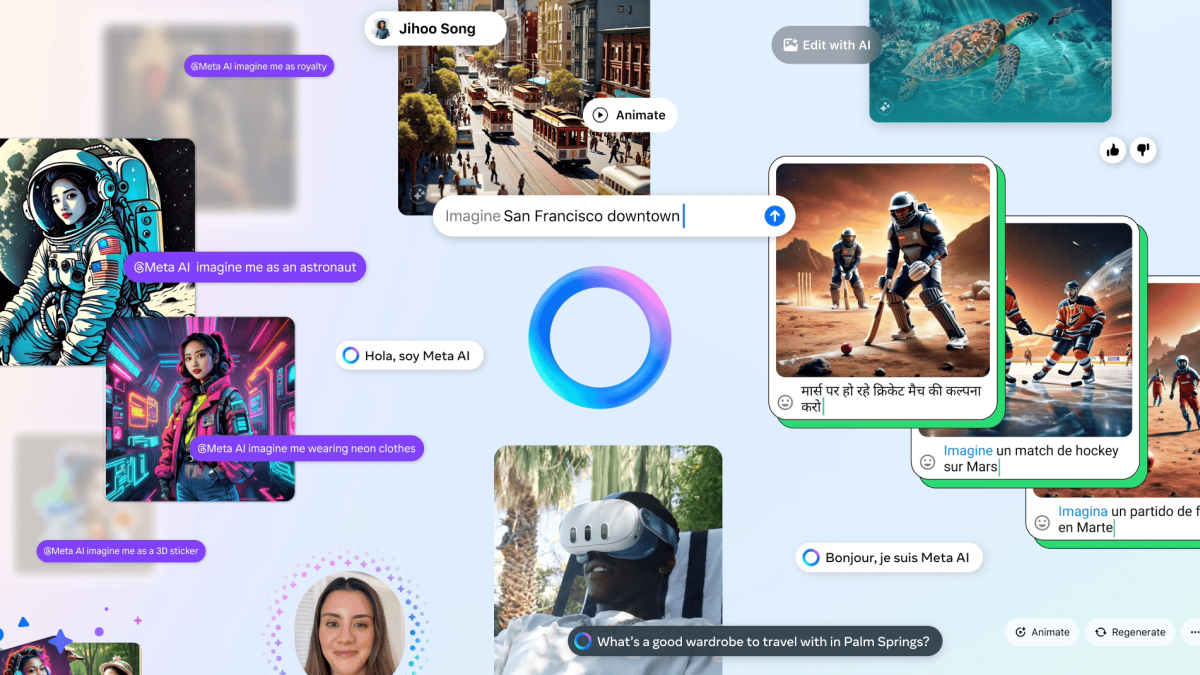

An AI web scraper is a big part of that because it collects large amounts of data from the internet. This data helps the AI learn and improve its abilities to understand and generate text, recognize patterns, and make decisions.

Apple also once faced similar scrutiny over Applebot, its web crawler, which powers features like Spotlight, Siri, and Safari search. Still, you can opt out of it by making a few tweaks to your website’s robots.txt file.
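For site owners wondering what such a tweak might look like, here is a minimal sketch in Python that checks a sample robots.txt with the standard library's `urllib.robotparser`. The user-agent tokens `ClaudeBot` and `Applebot`, the `example.com` URL, and the `is_allowed` helper are assumptions for illustration and do not come from iFixit's actual file, so check each crawler's own documentation for the exact name it uses.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents. The user-agent tokens below are
# assumptions; confirm the exact names in each crawler's documentation.
ROBOTS_TXT = """\
User-agent: ClaudeBot
Disallow: /

User-agent: Applebot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file as a list of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Print the verdict for two blocked crawlers and one unlisted crawler.
    for agent in ("ClaudeBot", "Applebot", "SomeOtherBot"):
        print(agent, is_allowed(agent, "https://example.com/guide"))
```

Keep in mind that robots.txt is only a request. It works when a crawler chooses to honor it, which is why the dispute over terms of service matters in the first place.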
