Microsoft explains why LLMs hallucinate and make up answers

A recent Twitter exchange between a user and a Microsoft executive has brought renewed attention to the limitations of large language models (LLMs), such as the one behind Bing Chat, and the potential for information gaps when they rely solely on their internal knowledge.

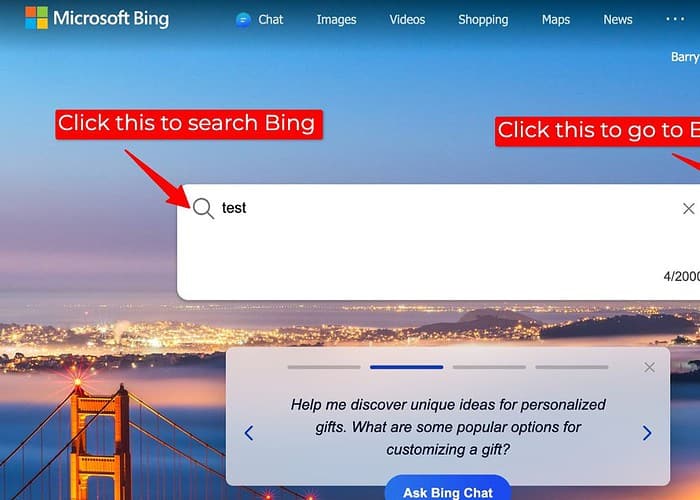

The discussion stemmed from a user reporting inaccurate search results on Bing when its search plugin, which accesses external web data, was disabled. In response, Mikhail Parakhin, CEO of Advertising & Web Services at Microsoft, acknowledged the possibility of LLMs “making things up” in such situations.

He explained that when deprived of the vast information available through the web, LLMs can fall back on their internal knowledge, which comes from the collection of text and code used for training, to generate responses. However, this internal generation may not always be accurate or aligned with factual reality, so results can differ from those obtained with the search plugin enabled.

To me, this raises important questions about transparency and accuracy in LLM-powered searches, especially when external data sources are unavailable. When LLMs generate responses without accessing external data, users should be given a clear indication of the information’s source and any potential limitations.

While providing answers is valuable, LLMs must prioritize reliable information over filling knowledge gaps with potentially inaccurate internal generations. Exploring alternative approaches, such as indicating uncertainty, suggesting further research, or simply stating that an answer is unavailable, could enhance trust and prevent the spread of misinformation.
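To make the idea concrete, here is a minimal Python sketch of what source labelling and abstaining could look like. It is only an illustration under stated assumptions, not how Bing, ChatGPT, or Bard actually work: the `Answer` class, the `answer_query` function, the `confidence` score, and the `threshold` value are all hypothetical names invented for this example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Answer:
    text: str
    source: str  # "web", "model", or "none"
    caveat: Optional[str] = None


def answer_query(
    web_results: List[str],
    model_guess: Optional[str],
    confidence: float,
    threshold: float = 0.7,  # hypothetical cut-off, chosen arbitrarily
) -> Answer:
    """Return an answer labelled with where it came from.

    `confidence` is an assumed stand-in for whatever score a real
    system might expose; no specific product API is implied.
    """
    if web_results:
        # Grounded in retrieved pages: use the top result.
        return Answer(text=web_results[0], source="web")
    if model_guess is not None and confidence >= threshold:
        # No external data: answer, but flag the limitation.
        return Answer(
            text=model_guess,
            source="model",
            caveat="Generated without web search; please verify.",
        )
    # Neither grounded nor confident: say so instead of guessing.
    return Answer(
        text="No reliable answer is available.",
        source="none",
        caveat="Try enabling search or consult another source.",
    )
```

With search disabled and a low-confidence guess, this sketch returns the "no reliable answer" response rather than a made-up one, which is the behavior argued for above.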

It is little wonder, then, that people prefer ChatGPT over Bing Chat/Copilot.

For these reasons, I personally prefer Bard: Google has added a feature that lets users know whether a piece of information is referenced from an external source, which makes it easier to trust.